Research — Oct. 02 2025

By Greg Macatee

The AI infrastructure market continues to experience remarkable growth, a trend expected to persist well into the future. Based on 451 Research's Voice of the Enterprise: AI & Machine Learning, Infrastructure 2025 survey, this report provides proof points on these topics and presents preliminary market sizing estimates. We also examine a handful of potential technological inhibitors identified by users and highlight the growing role of model and supporting software stacks, where significant untapped value may exist.

Customers continue to show remarkable interest in and uptake of AI technologies across all levels of adoption and skill sets. Many are still in relatively early stages of development, while others are growing or even scaling their projects and capabilities. Either way, AI infrastructure remains a critical pillar. When planned for and implemented correctly, it can be an enabler, but it also introduces multiple single points of failure across the hardware and software stacks, any of which can derail projects and other initiatives. In response, AI infrastructure users, commercial suppliers and open-source communities are taking notable steps to address many of these potential pitfalls while also creating incremental value, but there is still work to be done.

Context

AI infrastructure market performance remains strong going into the second half of 2025. It has become a point of emphasis on virtually every earnings call, with vendors providing ever-deeper insight into their current and future levels of performance, which in some cases stretches into multibillion-dollar ranges. Our preliminary analysis of approximately 160 AI infrastructure market participants indicates the market is positioned to generate more than $250 billion in aggregate revenue during 2025. (These figures are subject to change. Discussion of how we view AI infrastructure is provided in this report.)

AI infrastructure market participants include many incumbent data center infrastructure suppliers and hyperscalers, in addition to a growing cadre of specialized GPU-as-a-service providers and neoclouds, whose offerings include a variety of highly tailored AI infrastructure, platforms and services. 451 Research classifies these into three groups in its Tech Trend in Focus: GPUaaS market momentum report. (The first two groups are generally included, with the third evaluated on a case-by-case basis.) GPU-focused neoclouds include well-known vendors such as CoreWeave Inc., Crusoe, Nebius Group NV and Vultr, among others that offer purpose-built AI infrastructure services such as compute, storage and networking. GPU and general IT/service providers, including Rackspace Technology Inc., Akamai Technologies Inc., DigitalOcean Holdings Inc. and International Business Machines Corp., offer select accelerated services, but these are not their primary line of business. Model-focused overlay providers simplify AI deployment pipelines and enable scalable model access, while abstracting away in-depth infrastructure management. They generally do not own their AI infrastructure and often build their services on top of offerings from the other two groups or from hyperscalers. This category includes names such as Baseten, Lightning AI, Modal Labs Inc. and Runpod.

Strong market performance aligns well with ongoing customer momentum and planned future investments, which show virtually no sign of abating, according to our Voice of the Enterprise (VotE): AI & Machine Learning, Infrastructure 2025 survey. In fact, increased spending is expected across all major technologies that form the foundation of AI infrastructure. Over the next 12 months, organizations expect to increase average spending for AI PCs by 22%, servers by 20%, accelerators (GPUs, DPUs and other accelerators) by 20%, storage by 19% and networking by 18%. While predominantly funded by organizations' IT budgets, AI initiatives are important enough as business-critical objectives to receive additional funding from other departments. Over two-thirds of organizations fund 10% or more of their AI infrastructure budgets through these external sources.

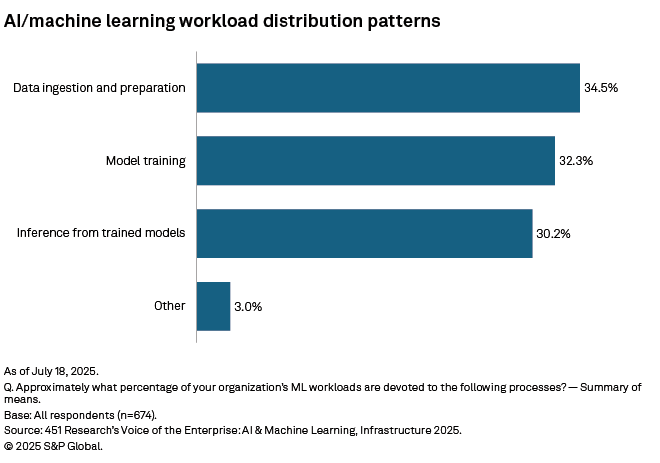

AI workload trends also continue to evolve as organizations create and refine AI projects. According to this same VotE data, organizations report a roughly even split in how their AI infrastructure is used among data ingestion and preparation (35%), model training and fine-tuning (32%), inference (30%), and other operationalized workloads. Looking ahead, it is reasonable to believe that the share of inferencing and other operational workloads will continue to grow and will likely soon overtake both data ingestion and preparation and model training and fine-tuning. This is based on analysis of a set of similar questions asked in our VotE: AI & Machine Learning, Infrastructure 2024 survey, in which respondents indicated a 9-percentage-point growth in inferencing (from 15% to 24%) when asked which AI workload will be most demanding on their infrastructure over the next 24 months. This came at the expense of training and tuning, while the percentages for the data ingestion and preparation stages remained static. Comparing the two datasets, what is perhaps most noteworthy is that the current share of inference workloads already exceeds what 2024's respondents expected for their inference workloads in two years' time (30% versus 24%, respectively).

Technology trends

The AI infrastructure market has clear tailwinds and is making notable progress toward broader deployments of inference and reasoning workloads — and eventually agents — but what are the potential risks? Our VotE: AI & Machine Learning, Infrastructure 2025 survey data suggest more than half of customers experience difficulties throughout their technology stacks in compute, storage, networking, data management and availability, and security. This finding is consistent regardless of where their infrastructure is deployed, whether in on-premises data centers, the cloud or edge environments.

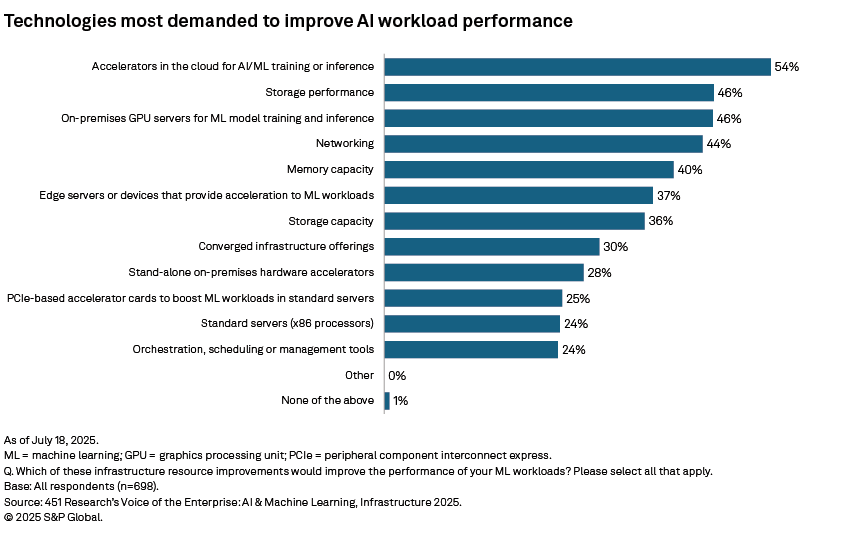

While raw performance influences AI outcomes (GPUs and other accelerators in the cloud were customers' most demanded resource, and on-premises accelerated servers were number three, according to our VotE survey), it is only useful if supplemented by strong and comprehensive data strategies. This spans the entire data life cycle — from ingestion to deletion or archival — and includes aspects such as data management and availability, storage, and security. With data and metadata performance and availability increasingly cited as AI bottlenecks, they bring storage performance and networking into the fold, too. For the latter, this includes both north-south traffic of data in and out of data center environments as well as east-west traffic within them. Similarly, memory limitations can also inhibit training and inference workloads. That models continue to rapidly increase in size — in some cases, even expanding into trillion-parameter ranges — can make each of these bottlenecks significantly more challenging to overcome. They are also all listed among the top infrastructure resources needed to improve workload performance.
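To give a rough sense of why trillion-parameter models strain memory and storage, the sketch below estimates the size of model weights alone at a few common numeric precisions. It is a back-of-the-envelope illustration, not a figure from the survey: the function name and the precision table are illustrative, and the estimate ignores activations, optimizer state and key-value cache, all of which add to the real footprint.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_footprint_gb(num_params: int, precision: str) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes).

    Counts weights only; activations, optimizer state and KV cache are excluded.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    one_trillion = 10**12
    for precision in ("fp32", "fp16", "int8"):
        size_gb = weight_footprint_gb(one_trillion, precision)
        print(f"{precision}: {size_gb:,.0f} GB")
```

Under these assumptions, a one-trillion-parameter model needs about 2,000 GB for its 16-bit weights alone, which is why memory capacity, storage throughput and network bandwidth all surface as bottlenecks at the same time.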

Given these challenges, models and their supporting software stacks have a clear role to play in addressing them. This ranges from models and model frameworks to other supporting software such as inference frameworks and inference servers. These technologies have benefited from a variety of recent advancements that help optimize workloads and the massive amounts of data that infrastructure (such as storage, networks and memory) tends to struggle with. For example, compression covers a handful of techniques that reduce the size of models and can lead to improved computational efficiency. Other optimizations, such as kernel fusions, occur at the compiler level within model frameworks. These techniques combine separate computational kernels or sequential mathematical operations into single, more efficient processes. At the inference framework and server levels, techniques such as speculation, disaggregation of prefill and decoding stages, parallelism, and optimized key-value cache management make computations more efficient and better utilize infrastructure resources, specifically GPUs and memory. For key-value cache, this also includes tiering of cache onto "colder" resources such as storage and storage networks.
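As one concrete instance of compression, the sketch below shows symmetric 8-bit quantization of a small list of weights in plain Python. It is a minimal illustration under simplifying assumptions (one scale per tensor, no calibration data), not how any particular framework implements it; the function names and sample values are hypothetical.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, where any scale would do.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]  # hypothetical sample values
    quantized, scale = quantize_int8(weights)
    restored = dequantize(quantized, scale)
    worst_error = max(abs(w - r) for w, r in zip(weights, restored))
    print("quantized:", quantized)
    print("worst-case error:", worst_error)
```

Each weight now occupies one byte instead of two or four, at the cost of a rounding error bounded by half the scale. Production schemes typically refine this with per-channel or per-group scales to limit the accuracy loss.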

Beyond improved performance, these techniques also translate to other infrastructure efficiencies, which can become quite sizable when operating at scale. Improved infrastructure efficiency allows users to support the same set of workloads with fewer resources (e.g., servers, storage, networking), which has direct benefits such as lower hardware and software costs, in addition to other more indirect ones such as less maintenance and management overhead and required data center power, cooling and floorspace. Smaller and more efficient models can also capably run on a wider range of hardware, including lightweight configurations deployed in remote locations and at the edge closer to where data is generated.
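The link between smaller models and fewer resources can be sketched with simple arithmetic: the minimum number of accelerators needed just to hold a model's weights. The 80 GB per-device memory figure and the weight sizes below are assumptions chosen for illustration, not survey data, and the estimate leaves out activations, key-value cache and any headroom.

```python
import math


def devices_needed(weight_gb: float, device_memory_gb: float) -> int:
    """Minimum device count whose combined memory can hold the weights."""
    return math.ceil(weight_gb / device_memory_gb)


if __name__ == "__main__":
    device_memory_gb = 80.0  # assumed per-accelerator memory, for illustration
    # Assumed weight sizes for a one-trillion-parameter model at each precision.
    for label, weight_gb in (("16-bit", 2000.0), ("8-bit", 1000.0)):
        count = devices_needed(weight_gb, device_memory_gb)
        print(f"{label} weights: at least {count} devices")
```

In this hypothetical case, halving the bytes per weight cuts the minimum device count from 25 to 13, and the associated power, cooling and floorspace requirements shrink along with it.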

This article was published by S&P Global Market Intelligence and not by S&P Global Ratings, which is a separately managed division of S&P Global.
