Research — Nov 29, 2024

Agentic AI is the next frontier for GenAI, and a lot is riding on it. Many software providers have highlighted agentic AI's potential to enable net new revenue streams, a development investors have been impatiently awaiting. It could also be a step change toward the major productivity gains promised by GenAI, which have largely gone unrealized so far. In this report, we introduce the core concepts of agentic AI and key areas of development as the way we use AI shifts from passive information processing to active problem-solving.

The fervor for AI agents has taken hold in the enterprise as these new approaches begin to come to life. Models are advancing rapidly, and developments in frameworks, techniques and services are already producing early-stage commercial products. Chatbots and virtual assistants are consistently cited as top use cases for GenAI across 451 Research's survey base. But a great deal of hidden complexity, unresolved governance challenges and further development work remain as these entities become more complex and autonomous. These issues must be addressed proactively; otherwise, this trend may only prolong the disillusionment already emerging around GenAI in the short to medium term.

In the longer term, as agents advance toward delivering value comparable to that of a human contributor, organizations will also need to change how they think about and acquire agents. Today, operational cost remains a significant barrier to adoption, since more complex workloads increase the burden on models and on the infrastructure that supports them. A new paradigm is likely to emerge that moves away from traditional consumption or subscription models toward pricing based on ROI. We project that agents will become a massive spending category, because the renewed focus on value, combined with eventual reductions in operational cost, will make this spending easier to justify.

The rise of modular AI systems

The initial excitement around GenAI centered primarily on the foundation models themselves. These advanced models produced high-quality creative content and demonstrated impressive comprehension, with each new iteration showing rapid advances in memory, speed and, most critically, reasoning. However, as enterprises venture deeper into building and scaling GenAI applications, many are hindered by these models' practical limitations.

Data limitations. Large language models (LLMs) and foundation models are constrained by the data they are trained on or given direct access to. These models often lack direct access to real-time information, which can prevent them from giving up-to-date or accurate responses. Additionally, LLMs are stateless, meaning they do not retain information about past interactions or the specific context of a conversation.

Reasoning and problem-solving. LLMs can make logical errors or jump to incorrect conclusions, especially on complicated reasoning problems. They often struggle with complex mathematical calculations and with problems that require a deep understanding of mathematical concepts beyond basic arithmetic.

Accuracy. Due to their generative nature, LLMs often produce inaccurate or misleading information, known as "hallucinations." These can occur when the model attempts to fill in gaps in its knowledge or provide a response that sounds plausible while being factually incorrect.

Efficiency. Foundation models are extremely large and generalist in nature. They often lack deep, domain-specific knowledge, and their size makes them computationally intensive (and expensive) to run for smaller, more specialized tasks.

Development techniques have emerged to work around these limitations. Fine-tuning remains the most popular (cited by 60% of organizations in our AI & Machine Learning, Infrastructure 2024 survey), likely due to its early prominence and the intuitive appeal of optimizing a model for specific needs. Another fast-growing approach for grounding GenAI models is retrieval augmented generation (RAG), which combines a large language model with a retrieval system (such as a vector database) that can search for and return relevant, factual and organization-specific information. These two approaches, along with AI guardrails (rules and safety constraints), improve the accuracy, relevance and ethical use of generated content, which becomes increasingly critical as that content informs more complex workflows.
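To make the retrieval step of RAG concrete, here is a minimal Python sketch of how retrieved content grounds a prompt. It assumes a toy bag-of-words similarity in place of a learned embedding model and an in-memory list in place of a vector database; `DOCUMENTS`, `embed`, `retrieve` and `build_prompt` are hypothetical names, and the final call to an actual LLM is left out.

```python
import math
import re
from collections import Counter

# Hypothetical organization-specific documents standing in for a vector database.
DOCUMENTS = [
    "Refunds are processed within 14 days of receiving the returned item.",
    "Our support desk is open Monday to Friday, 9am to 5pm.",
    "Enterprise plans include single sign-on and audit logging.",
]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real system would use a trained embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Pre-compute document vectors once, as an indexing step would.
INDEX = [(doc, embed(doc)) for doc in DOCUMENTS]


def retrieve(query: str, k: int = 1) -> list:
    """Return the k stored documents most similar to the query."""
    q = embed(query)
    ranked = sorted(INDEX, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_prompt(query: str, k: int = 1) -> str:
    """Ground the LLM by prepending retrieved context to the user's question."""
    context = "\n".join(retrieve(query, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("How many days do refunds take?"))
```

In a production system the index would hold embeddings from a trained model and the assembled prompt would be sent to the LLM, but the overall flow (embed the query, rank stored content by similarity, prepend the best matches) is broadly similar.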

There is, however, a more important trend: the move away from monolithic models, where all functionality is contained in a single large model such as an LLM, toward more modular AI systems. The monolithic approach, while powerful, presents challenges in scalability, performance and efficiency, and it relies heavily on the sophistication of the core model itself. Modular AI systems, on the other hand, break complex AI tasks into smaller components that can be handled separately, using multiple interacting tools such as other models, retrievers and data sources. This allows these systems to handle more complex interactions efficiently and to support more sophisticated "thought" processes, improving the quality of output.

Early research shows that providing the structure to help an LLM "think" through a query or request can also drive dramatic improvements in LLM performance, even compared to much larger models. Enter agentic workflows — one of the key paradigm shifts in the embrace of modular AI systems.

Enter agents

An agent is an LLM-powered decision-making engine. It is defined by its ability to ingest information, reason, plan, act and, ultimately, retain memory and learn from its behavior over time. At its core, an agent often has an LLM that is trained to orchestrate the interactions between different AI components or services. Specialization can be achieved by fine-tuning the LLM on relevant data and giving the agent access to specific tools (calculators, search engines, other models) and resources (databases, email, instructions). At its most advanced, an agent becomes like a digital knowledge worker, capable of autonomously executing workflows, completing tasks, and managing other agents or processes.

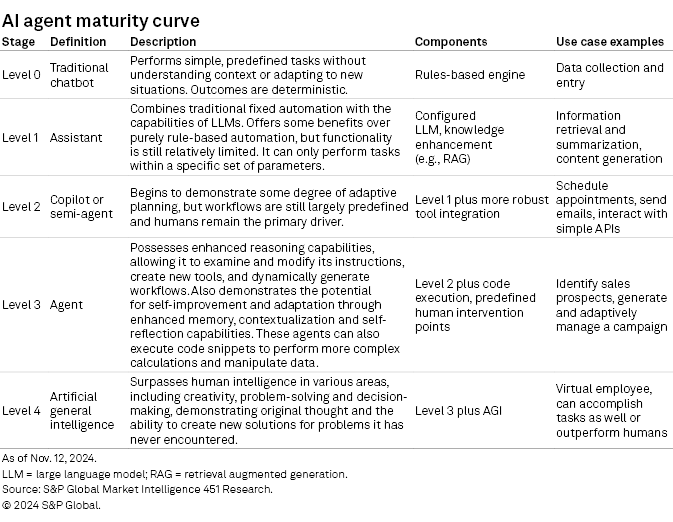

Today's agentic workflows still involve an element of predefined, or constrained, automation, with workflows and controls largely mapped out manually. This places them at around level 2 on the maturity curve (see figure). At this stage, these entities cannot act independently or make choices that would justify the term "agent." Moving to higher levels will most notably require advances in the LLMs themselves. Reasoning capabilities will be especially critical for breaking down tasks and planning accurately, which remains a limitation for agents today.

Agent frameworks

An ecosystem of technology vendors and research groups is actively developing agentic frameworks: the foundational structures and architectures for building and deploying AI agents. Many are anchored in one of two primary architectures, ReAct agents or function-calling agents. The choice depends on the nature of the application and the desired balance of flexibility, efficiency and reliability. Hybrid agents can combine the strengths of both.

ReAct agents. Rooted in the ReAct (reasoning and acting) pattern, this approach boils down to a robust prompt engineered to turn an LLM into a structured reasoning engine. The LLM is led to take in a query, reason over it, select an applicable tool, take action and observe the result, often looping through this sequence until it produces the desired output. ReAct agents are highly customizable and well suited to more sophisticated applications. They do, however, place a significant development burden on an organization's engineering team.
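The reason, act, observe loop described above can be sketched in a few dozen lines of Python. This is an illustration under stated assumptions, not any framework's implementation: `scripted_llm` is a hypothetical stand-in that returns canned text where a real agent would call an LLM, the `Thought:`/`Action:`/`Final Answer:` text format is one common convention rather than a standard, and `calculator` is the only tool.

```python
import ast
import operator
import re

# Safe arithmetic evaluator used as the agent's only tool (hypothetical tool set).
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}


def calculator(expression: str) -> str:
    """Evaluate +, -, *, / over numeric literals without using eval()."""
    def _eval(node):
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        raise ValueError("unsupported expression")
    return str(_eval(ast.parse(expression, mode="eval").body))


TOOLS = {"calculator": calculator}


def scripted_llm(transcript: str) -> str:
    """Stand-in for a real LLM: reasons, calls a tool once, then answers from the observation."""
    if "Observation:" not in transcript:
        return "Thought: I need to multiply.\nAction: calculator[12 * 7]"
    result = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: I have the result.\nFinal Answer: {result}"


ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")


def react_agent(question: str, llm=scripted_llm, max_steps: int = 5) -> str:
    """Loop: reason, act (call a tool), observe, until the LLM emits a final answer."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(step)
        if match is None:
            raise RuntimeError("LLM produced neither an action nor a final answer")
        tool, arg = match.groups()
        # Feed the tool's output back into the transcript for the next reasoning step.
        transcript += f"Observation: {TOOLS[tool](arg)}\n"
    raise RuntimeError("step limit reached without a final answer")


if __name__ == "__main__":
    print(react_agent("What is 12 times 7?"))
```

The `max_steps` cap is a deliberate design choice: because the loop only ends when the model emits a final answer, a bound keeps a confused model from cycling indefinitely.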

Function calling. In contrast, function-calling agents rely on the LLM itself to handle tool selection. When presented with a query, the model determines which tools are most relevant and selects the appropriate action from a predefined list of options. Many commercially available LLMs support tool calling and have been fine-tuned to decide which tools to select. These agents, while less customizable, provide a more direct and efficient approach in many scenarios.
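For contrast, a function-calling flow moves tool selection into the model, which returns a structured choice that the application validates and executes. In this minimal sketch, `fake_model_tool_choice` is a hypothetical stand-in for a tool-calling LLM, the JSON-Schema-style tool descriptions are illustrative rather than any vendor's exact format, and the tool implementations and exchange rates are made up.

```python
import json

# Predefined tools the model may choose from. The schema layout is illustrative only.
TOOL_SPECS = [
    {
        "name": "get_weather",
        "description": "Current weather for a city",
        "parameters": {"type": "object",
                       "properties": {"city": {"type": "string"}},
                       "required": ["city"]},
    },
    {
        "name": "convert_currency",
        "description": "Convert a USD amount into another currency",
        "parameters": {"type": "object",
                       "properties": {"amount": {"type": "number"}, "to": {"type": "string"}},
                       "required": ["amount", "to"]},
    },
]


def get_weather(city: str) -> str:
    # Hypothetical stub; a real tool would query a weather service.
    return f"Sunny in {city}"


def convert_currency(amount: float, to: str) -> str:
    rates = {"EUR": 0.9, "GBP": 0.8}  # made-up fixed rates for illustration only
    return f"{amount * rates[to]:.2f} {to}"


REGISTRY = {"get_weather": get_weather, "convert_currency": convert_currency}


def fake_model_tool_choice(query: str, tools: list) -> str:
    """Stand-in for a tool-calling LLM: returns its chosen tool and arguments as JSON text."""
    if "weather" in query.lower():
        city = query.rstrip("?").split()[-1]
        return json.dumps({"name": "get_weather", "arguments": {"city": city}})
    # A real model would extract the amount and currency from the query itself.
    return json.dumps({"name": "convert_currency", "arguments": {"amount": 100, "to": "EUR"}})


def run_function_call(query: str) -> str:
    """Let the model pick a tool, validate the pick against the predefined list, then execute it."""
    call = json.loads(fake_model_tool_choice(query, TOOL_SPECS))
    spec = next((t for t in TOOL_SPECS if t["name"] == call["name"]), None)
    if spec is None:
        raise ValueError(f"model chose an unknown tool: {call['name']}")
    missing = [p for p in spec["parameters"]["required"] if p not in call["arguments"]]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return REGISTRY[call["name"]](**call["arguments"])


if __name__ == "__main__":
    print(run_function_call("What's the weather in Paris?"))
    print(run_function_call("Convert 100 USD to euros"))
```

Validating the model's choice before executing it is the key design point: the model proposes, but only registered tools with their required arguments can run.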

Research teams across the ecosystem have been developing more robust commercial and open-source agent frameworks optimized for different tasks and use cases. This can involve adding supporting functions such as conversational memory for a chatbot application, or support for parallel execution in a medical diagnostics use case. The possibilities are vast, and new approaches are emerging rapidly, with varying degrees of specialization.
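Conversational memory works around the statelessness of LLMs by replaying recent turns with every request. The sketch below assumes the simplest possible policy, a fixed-size window of recent turns; `ConversationMemory` is a hypothetical class, and real frameworks may instead summarize or retrieve older history.

```python
from collections import deque


class ConversationMemory:
    """Rolling window of recent turns, replayed to a stateless LLM on every call (illustrative sketch)."""

    def __init__(self, max_turns: int = 4) -> None:
        # A deque with maxlen silently discards the oldest turn once the window is full.
        self.turns: deque = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        """Record one turn of the conversation."""
        self.turns.append((role, text))

    def as_prompt(self, new_message: str) -> str:
        """Build the full prompt: remembered turns first, then the new user message."""
        lines = [f"{role}: {text}" for role, text in self.turns]
        lines.append(f"user: {new_message}")
        return "\n".join(lines)


if __name__ == "__main__":
    memory = ConversationMemory(max_turns=2)
    memory.add("user", "My name is Ada.")
    memory.add("assistant", "Nice to meet you, Ada.")
    memory.add("user", "I like chess.")
    # The oldest turn ("My name is Ada.") has been evicted from the window.
    print(memory.as_prompt("What's my name?"))
```

With `max_turns=2`, the user's original statement has already fallen out of the window by the time they ask about it; only the assistant's reply still mentions the name. That illustrates the trade-off between prompt length (and cost) and how much context the model sees.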

Agents have been observed to perform better when built for a narrower set of tasks and domains. There is significant development around multi-agent architectures, in which multiple agents, each with specialized instructions and toolsets, are called upon to handle specific tasks or parts of tasks. A simple example is a hierarchical multi-agent setup, in which an orchestration agent prompts a coder agent and a critic agent to develop and debug code in sequence, producing higher-quality output.
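The hierarchical coder-and-critic pattern can be sketched as a simple orchestration loop. Everything here is hypothetical and scripted so the example runs without a model: `coder_agent` returns a buggy first draft and a corrected second draft, and `critic_agent` reviews a draft by running test cases rather than by prompting an LLM.

```python
from typing import Optional


def coder_agent(task: str, feedback: Optional[str]) -> str:
    """Hypothetical scripted coder; a real one would be an LLM prompted with the task and feedback."""
    if feedback is None:
        return "def add(a, b):\n    return a - b\n"  # first draft contains a bug
    return "def add(a, b):\n    return a + b\n"      # revised draft after critique


def critic_agent(source: str, tests: list) -> Optional[str]:
    """Hypothetical critic: runs test cases against the draft and returns feedback, or None if it passes."""
    namespace: dict = {}
    exec(source, namespace)  # acceptable only because the draft is scripted; never exec untrusted model output
    for args, expected in tests:
        got = namespace["add"](*args)
        if got != expected:
            return f"add{args} returned {got}, expected {expected}"
    return None


def orchestrator(task: str, tests: list, max_rounds: int = 3) -> tuple:
    """Prompt the coder, hand the draft to the critic, and loop until the critic approves."""
    feedback = None
    for round_no in range(1, max_rounds + 1):
        draft = coder_agent(task, feedback)
        feedback = critic_agent(draft, tests)
        if feedback is None:
            return draft, round_no
    raise RuntimeError(f"no approved draft after {max_rounds} rounds: {feedback}")


if __name__ == "__main__":
    code, rounds = orchestrator("Write add(a, b)", [((2, 3), 5), ((0, 0), 0)])
    print(f"approved after {rounds} round(s):\n{code}")
```

The orchestrator, not either specialist, owns the control flow and routes the critic's feedback back to the coder, which is what makes the setup hierarchical.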

This addresses challenges inherent to single-agent architectures, such as the performance and efficiency limitations of a generalist agent. Examples of prevalent multi-agent frameworks include OpenAI LLC's Swarm project, crewAI Inc. and Microsoft Corp.'s AutoGen. Other prominent players in agent frameworks include LangChain, LlamaIndex, Phidata and the foundation model companies.

It bears mentioning that as agentic architectures develop, so does the need for robust governance structures to ensure these entities are compliant and safe to use at scale. Unlike LLMs, which primarily process and generate text, AI agents can interact with the environment, make decisions, and potentially take actions. As these agents grow more autonomous and capable, questions will arise about accountability, bias and potential misuse.

This places greater emphasis on strong governance frameworks, including clear guidelines for agent behavior, robust security measures and regular audits of AI agent systems. Whereas MLOps focuses on the life cycle of machine-learning models, AgentOps, a newer concept, extends these principles to AI agents, focusing on their training, deployment and ongoing management. Ultimately, a well-governed AI agent ecosystem is crucial to harnessing the benefits of these technologies while minimizing potential drawbacks.
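As a rough illustration of governance controls applied at the point of action, the sketch below wraps an agent's tools with an allowlist (a simple behavior guideline) and records every attempted call in an audit log. `GovernedToolbox` and the example tools are hypothetical; this is not AgentOps tooling or any product's API, and a real deployment would need persistent, tamper-evident logs and richer policies.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable, Dict, Set


@dataclass
class GovernedToolbox:
    """Wraps an agent's tools with a policy check and an append-only audit trail (illustrative only)."""
    tools: Dict[str, Callable[..., Any]]
    allowed: Set[str]  # behavior guideline: which actions this agent may take
    audit_log: list = field(default_factory=list)

    def call(self, agent_id: str, tool: str, **kwargs: Any) -> Any:
        permitted = tool in self.allowed and tool in self.tools
        # Record every attempt, allowed or not, so it can be reviewed in later audits.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent_id,
            "tool": tool,
            "args": kwargs,
            "permitted": permitted,
        })
        if not permitted:
            raise PermissionError(f"{agent_id} is not allowed to call {tool}")
        return self.tools[tool](**kwargs)


if __name__ == "__main__":
    box = GovernedToolbox(
        tools={"search": lambda q: f"results for {q}", "send_email": lambda to, body: "sent"},
        allowed={"search"},  # this agent may search but not send email
    )
    print(box.call("research-agent", "search", q="agent frameworks"))
    try:
        box.call("research-agent", "send_email", to="ceo@example.com", body="hi")
    except PermissionError as err:
        print("blocked:", err)
    print([(entry["tool"], entry["permitted"]) for entry in box.audit_log])
```

Logging blocked attempts as well as permitted ones is deliberate: denied actions are often among the most informative entries in a later audit.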

The evolving ecosystem

An increasingly crowded ecosystem of vendors has emerged to commercialize agentic AI. Productivity vendors like Salesforce Inc., Atlassian Corp. and Adobe Inc.; search and knowledge management providers like Vectara Inc., GemShelf Inc.'s Shelf.io and Sinequa; hyperscalers like Google LLC, Microsoft, International Business Machines Corp. and Amazon.com Inc.; foundation model companies like Anthropic PBC and OpenAI; and a raft of agent pure plays like Chain.ML, Cognition.ai, Adept AI Labs Inc.'s Adept.ai, Simbolica, Graft and Leena AI Inc.'s Leena.ai are all developing products, contributing research and solidifying their positions at various stages of the agent value chain. We anticipate substantial disruption and consolidation in the months to come as opportunities crystallize and capabilities advance.

This article was published by S&P Global Market Intelligence and not by S&P Global Ratings, which is a separately managed division of S&P Global.

451 Research is a technology research group within S&P Global Market Intelligence. For more about the group, please refer to the 451 Research overview and contact page.
