Research — Sept 30, 2024

By Melissa Incera and Dan Thompson

Generative artificial intelligence is experiencing explosive growth. Its rapid adoption, however, comes at a cost. Training and running these complex AI models requires massive amounts of processing muscle, putting a significant strain on the datacenter, infrastructure and energy resources needed to keep them operational. This creates a crucial challenge — how can the sustainable development of GenAI be achieved without compromising its potential?

Major datacenter buildouts to accommodate rising AI demand are already underway, but questions about exactly what to build, and how much, remain unanswered. GenAI adoption is happening faster than we have seen with any other emerging technology, and technology vendors themselves are investing billions of dollars in the transition for fear of being unprepared to capitalize on it. However, some datacenter companies and investors remember the dot-com era, when overblown projections of datacenter needs led to a glut of unused space after the bubble burst, and they are fearful of overbuilding. Already, there is a reasonable amount of skepticism over when, if ever, capital expenditures will generate comparable returns, as well as growing disillusionment over GenAI's applicability. Will it be a costly overreaction or a serious underestimation? Which way the pendulum swings will decide the fate of billions of dollars.

Rise of the AI datacenter

General compute and traditional AI both consume a significant amount of the world's energy resources, and the rise of GenAI is only intensifying this relationship. Industry consensus is that generative queries and workloads consume between 10 and 30 times more energy than traditional or task-specific AI, given the size and complexity of these models and the computational demand of generating net new content as an output. In addition, these processing burdens have placed a strain on supporting systems, like data storage and networking, increasing their physical and carbon footprints in step.

All this has given rise to the AI datacenter, a specialized facility designed to handle AI's unique demands. These centers house massive amounts of computing power, often in the form of specialized hardware like GPUs, to handle the complex calculations required for training and running AI models. They also bring to bear more robust networking to facilitate the vast flow of data between the now higher-performance servers and storage systems.

Today, most AI development is happening in hybrid environments, and very few datacenters are equipped for full AI or GPU-based compute. While AI infrastructure is certainly being deployed, it is not yet being deployed at mass scale outside of a few specialized projects (such as the Microsoft Corp./OpenAI LLC Stargate project). This will likely change over time.

Even in these hybrid datacenters, incorporation of this specialized AI infrastructure represents a step change in resource consumption. Previously, a single server rack might consume the energy equivalent of a typical home. With the advent of GPU-powered computing, however, we are seeing racks consuming over 10 times that amount, while AI data halls can approach energy demands comparable to entire neighborhoods.

This escalating power consumption is also impacting construction costs, with building costs alone jumping from roughly $6 million to $8 million per megawatt of capacity to $10 million to $15 million or higher. Additionally, more powerful infrastructure components place pressure on secondary elements like cooling, because high-performance computing generates immense heat, and the fiscal and social costs of cooling are also high.
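To put the per-megawatt figures in concrete terms, the short Python sketch below multiplies the quoted cost ranges by a facility size. The 100 MW capacity and the function name `build_cost_range` are illustrative assumptions, not figures or methods from the research.

```python
# Building-cost ranges per megawatt quoted in the text, in millions of US dollars.
PRIOR_COST_PER_MW = (6.0, 8.0)
CURRENT_COST_PER_MW = (10.0, 15.0)  # the text notes costs can run higher


def build_cost_range(capacity_mw, cost_per_mw_range):
    """Return the (low, high) building cost in millions of dollars."""
    low, high = cost_per_mw_range
    return capacity_mw * low, capacity_mw * high


if __name__ == "__main__":
    capacity_mw = 100  # hypothetical facility size, chosen only for illustration
    prior = build_cost_range(capacity_mw, PRIOR_COST_PER_MW)
    current = build_cost_range(capacity_mw, CURRENT_COST_PER_MW)
    print(f"Prior range:   ${prior[0]:,.0f}M to ${prior[1]:,.0f}M")
    print(f"Current range: ${current[0]:,.0f}M to ${current[1]:,.0f}M")
```

Under that assumed capacity, the building cost alone moves from $600 million to $800 million up to $1 billion to $1.5 billion, before cooling and other secondary systems are counted.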

The elusive challenge of scale

To accommodate future demand, scaling is a must. Given the lead time required to build new datacenters, accurately forecasting demand years in advance is tricky. Several factors complicate these projections:

Scale of interest. 451 Research's recent AI & Machine Learning Infrastructure 2024 survey shows that only 4.5% of organizations are not experimenting with GenAI in any capacity, while 18% report that it is fully integrated across their organization. This leaves the vast majority of enterprises (76%) somewhere in the middle, representing unfulfilled future demand.

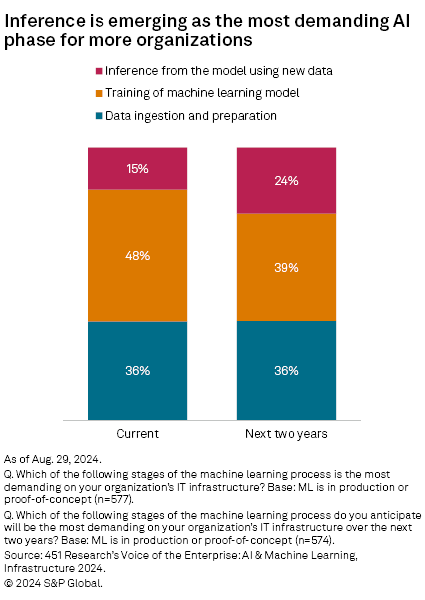

Market maturation. Today, GenAI workloads are dominated by training. As organizations get further along in their deployments, our data shows a shifting focus from training to inference — or putting trained models into action (see figure below). Although inferencing is less resource-intensive in theory, it has the potential to outweigh training demands through sheer scale. Additionally, much of it represents new resource demand. OpenAI's flagship ChatGPT, for instance, is estimated to be handling over 10 million queries per day (inferencing), even as it continues to develop and train new models.

New applications. GenAI use cases are quite simplistic today, but applicability is expanding. Our AI & Machine Learning Use Cases 2024 survey shows that organizations expect to add capabilities in image, video and structured data generation alongside text within the next 12 months. Additionally, we anticipate the growth of more complex use cases like AI agents, where a single query might require a cascade of API calls to large language models and other models to solve a singular problem.

These three compounding factors make it difficult to see where the point of inflection will be as it relates to demand for GenAI and AI broadly. Some technology providers close to the action are going full bore. Microsoft and OpenAI, for example, are jointly investing in a $100 billion datacenter project expected to take five to six years to complete, housing an AI supercomputer called Stargate. Alphabet Inc.'s Google recently stated that the risk of underinvesting in GenAI is higher than that of overinvesting. Others have been more conservative, opting to let front-runners make the big capital expenditures.

The sustainability quotient

In January, the International Energy Agency forecast that in the next two years, energy usage for the AI and cryptocurrency sectors, which represented almost 2% of global energy demand in 2022, could double to 4% by 2026. This would make datacenter demand equivalent to the amount of electricity used by Japan.
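As a rough illustration of what that doubling implies, the Python sketch below converts the 2% (2022) and 4% (2026) shares into an average annual growth rate. It assumes smooth compounding over the four years from the 2022 baseline, which is a simplification and not part of the forecast itself; the function name is hypothetical.

```python
def implied_annual_growth(start_share, end_share, years):
    """Average compound annual growth rate that takes start_share to end_share."""
    return (end_share / start_share) ** (1 / years) - 1


# Shares of global energy demand quoted in the text (percent).
START_SHARE_2022 = 2.0
END_SHARE_2026 = 4.0

# Assumption: steady compounding between the 2022 baseline and 2026.
rate = implied_annual_growth(START_SHARE_2022, END_SHARE_2026, 2026 - 2022)

if __name__ == "__main__":
    print(f"Implied average growth in share: {rate:.1%} per year")
```

Under these assumptions the share would need to grow by roughly 19% a year. Because this is growth in the share of demand, absolute consumption would rise faster still if total global demand also grows over the period.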

Such a large societal impact makes us wonder: To what degree is sustainability a factor as organizations develop their AI strategies? On this, the data is mixed. In our AI & Machine Learning 2024 survey, virtually all organizations (90%) said sustainability factored into their infrastructure decision-making in some capacity, reflecting a small decline from the prior year. What is more telling, however, is that sustainability this year ranked as less critical in infrastructure decision-making than data privacy, flexibility and scalability requirements.

Similarly, when we asked in an earlier survey what aspects of AI projects were actively being tracked, energy consumption (one of the biggest sustainability considerations) was cited as a key performance indicator by only 25% of organizations. This suggests that while sustainability is a concern for organizations in theory, most are prioritizing AI performance and potential over optimization of longer-term concerns at this stage in the development curve.

The data also suggests enterprises are relying on their technology providers to solve these issues by making AI more efficient, practical and cost-effective. Most providers are incentivized to do so. LLM providers like Meta Platforms Inc., Mistral AI SAS and Anthropic PBC continue to push out new models that grow more efficient, even as they grow more performant. NVIDIA Corp. claims its latest round of Blackwell processors can run GenAI models at 25 times less cost and energy consumption than the previous generation.

Even the most sustainability-minded providers are pushing their targets further into the future, at the risk of missing out on revenue and market leadership. Google, in January 2023, announced it was no longer maintaining operational carbon neutrality, transitioning away from a carbon credit strategy it implemented in 2007 as its emissions, and those of its partners, have soared. Microsoft, which in 2020 committed to becoming carbon-negative by the end of the decade, saw its greenhouse gas emissions increase by about 30% in fiscal year 2023.

Conclusion

Will inference-type workloads consume even more energy than the training workloads we have seen so far, or will software breakthroughs allow less energy-hungry CPU-based compute to lessen the blow? Will the next generation of NVIDIA systems enable companies to "do more with less," or will companies simply continue to "do more with more" in terms of energy consumption? Furthermore, will additional processing breakthroughs radically change our outlook on future energy consumption? Will the pace of datacenter demand taper off as any of the above happens, allowing the tech giants to regain lost ground for their sustainability goals?

Each of these questions continues to be hotly debated, so perhaps it is worth returning to the current reality. Even before the boom of GenAI, hyperscalers were picking up the pace of development. Without additional demand sources, existing datacenters and build pipelines are already creating challenges for the world's power grids in a very localized manner, and it is already unsustainable — so now what?

This article was published by S&P Global Market Intelligence and not by S&P Global Ratings, which is a separately managed division of S&P Global.

451 Research is a technology research group within S&P Global Market Intelligence. For more about the group, please refer to the 451 Research overview and contact page.
