Research — 25 Oct, 2023

By Nick Patience and Alexander Johnston

It was more than six months ago that a letter signed by a group including Elon Musk and Steve Wozniak called for a half-year pause on generative AI development. As our monthly updates attest, this letter failed in its objective. The last six months have seen an array of announcements from the companies that kicked off the trend (OpenAI, Microsoft Corp. and Google) as well as the entrance of specialist startups and established technology companies. This month continues this trajectory, with releases from many of the better-known generative AI companies and a number of launches from new entrants, increasingly from companies outside of the US. To keep pace, these digests are augmented with various reports on product releases from vendors that you can find as part of our Market Insight service.

Additionally, the US fall technology conference season started with Google Cloud Next in San Francisco, the last before it moves to Las Vegas in 2024; Salesforce Inc.'s Dreamforce, which also made noise about leaving San Francisco; and Oracle Cloud World, which moved to Las Vegas from San Francisco in 2022. Beyond the threat to San Francisco's conference business, the events were notable for the many generative AI announcements made by these vendors and others.

Product releases and updates

Stability AI announced music and sound generation tool Stable Audio. The free version can be used to generate tracks of up to 45 seconds, with a paid subscription extending the length to 90 seconds and allowing for the track's use for commercial projects. It is primarily designed to support musicians looking to include samples within their music, but examples presented by the company include the generation of background sounds such as cars passing.

Microsoft announced the unification of many of its AI investments in Microsoft Copilot. Available initially in Windows 11, and coming to Microsoft 365, the Edge web browser and Bing in autumn, the AI "companion" is designed as a conversational interface that can respond to queries, informed by both web and corporate data, and take actions. The company says Bing will add support for text-to-image model DALL·E 3. OpenAI announced DALL·E 3 on the same day Microsoft made its announcements, declaring the model to be in research preview, and available to ChatGPT Plus and Enterprise customers in October.

Google announced generative AI product enhancements at its Google Cloud Next conference. Prominent among them was its Duet AI assistant, which is generally available across its Workspace apps and is in an expanded preview release as part of the Google Cloud Platform, where it is used to generate code and in GCP's Security Cloud. Google also announced the addition of new models from Anthropic and Meta Platforms Inc. to its Vertex service, along with upgrades to Google's PaLM, Codey and Imagen models. Vertex AI Search and AI Conversation are both generally available, and the company announced the preview of inference on Cloud TPU v5e, aiming for greater cost efficiency and scalability than earlier generations of the AI accelerator.

Oracle Corp. unveiled Oracle Cloud Infrastructure (OCI) Generative AI, a service hosted on Oracle's cloud that gives access to models from Oracle partner Cohere. The models are fully hosted on OCI, so there is no calling back to Cohere. The models are Cohere's Command, Summarization and Embeddings models, for which Oracle sees a variety of use cases, including chatbots, summarization and search. Customers can also bring their own models that are not based on Cohere's models. The two companies have a multiyear agreement that includes embedding generative AI into Oracle's numerous applications, including the Cerner healthcare products and Fusion HCM. Oracle is using dedicated hardware clusters that include NVIDIA Corp. GPUs and is promising predictable pricing. OCI Generative AI is in beta now and will be generally available later this year.

OpenAI launched ChatGPT Enterprise, a version of its famous chatbot service complete with enterprise levels of security and privacy, as well as unlimited usage and performance improvements. No customer data, including prompts entered into ChatGPT Enterprise, will be used to train the underlying GPT-4 model, and the services include workflow templates and API credits. In launching this product, OpenAI is effectively competing against major investor and technology partner Microsoft, with its Azure OpenAI Service also based on GPT-4. OpenAI didn't divulge any pricing details for its new service, but its launch coincided with reports that it is running at a $1 billion annual run rate, which is a dramatic uplift from the $28 million it reportedly generated in 2022.

Salesforce's Einstein 1 Platform is an AI-enabled layer that works with the company's Data Cloud to bring together data sources, both structured and unstructured, by mapping them to Salesforce's metadata system. Einstein 1 includes Einstein Copilot. For users, this means an assistant alongside their Salesforce environment, while Salesforce administrators also get access to prompt-builder, skills-builder and model-builder tools to help enhance the user experience, especially when users aren't getting the answers they need. In short, it enables admins to generate prompts and tweak the underlying models.

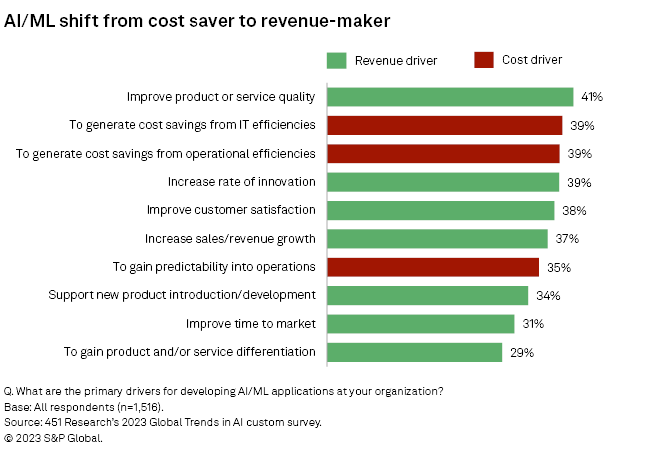

Meta announced Code Llama, which is positioned as a code-focused version of Llama 2 that is able to generate code and natural language. Code Llama is available in three sizes (the smallest with 7 billion parameters and the largest with 34 billion) alongside two additional variants: Code Llama – Instruct, which has had additional instruction tuning to better align generation with safe or helpful outputs, and the language-specialized Code Llama – Python. As the accompanying figure illustrates, Meta's models being free for commercial use is important, with many organizations keen to use AI as a means of generating revenue.

Zoom Video Communications Inc. released an AI companion that, according to the announcement, will be able to summarize video meetings and compose chat messages. Customers using paid services in regions where the companion has been rolled out gain access to it free of charge for those services.

The first chatbots approved under China's new regulatory framework were released in late August. Baidu Inc.'s ERNIE, ByteDance's Doubao, and chatbots from Baichuan and Zhipu AI are now available for general use. OpenAI's ChatGPT is not officially available in China, and channels that provide access have been clamped down upon by regulators. Tencent Holdings Ltd. announced the availability of its Hunyuan LLM, with Vice President Jiang Jie saying it will be competing with over 130 LLMs already available in China.

A new LLM from the Technology Innovation Institute (TII), part of the government of Abu Dhabi's Advanced Technology Research Council, is claimed to be the "largest openly available language model" and is available via Hugging Face. Falcon 180B features 180 billion parameters and was trained on 3.5 trillion tokens on up to 4,096 GPUs simultaneously, taking about 7 million GPU hours, according to TII. This means Falcon 180B is 2.5 times larger than Llama 2 and was trained with four times more compute, although whether that is something to boast about, we are not sure. The training set was mainly from RefinedWeb, plus some additional curated data including conversations, technical papers and a small amount of code, composing about 3% of the total set. TII released a chat model, fine-tuned for conversations and based on Falcon 180B. An Abu Dhabi state-backed AI company is apparently also in the cards.

An Arabic and English LLM was released in late August. The open-source model Jais was released in partnership between G42's Inception, Mohamed bin Zayed University of Artificial Intelligence, and Cerebras Systems. Jais is not the first open-source language model to emerge from the UAE — the aforementioned Falcon LLM was released in March — but it is the first from the region pretrained in Arabic and is engineered to represent regional perspectives and context.

Adept AI has open-sourced its Persimmon-8B LLM, which it calls "the best fully permissively licensed model in the 8B class." Adept is in the process of building what it calls AI agents to automate all sorts of tasks, and says it is not in the business of shipping stand-alone LLMs, so it has open-sourced the model. The model has a context size of 16k, and Adept claims it outperforms other models in its size class (generally those with fewer than 10 billion parameters) and can, in some cases, match Meta's Llama 2 models, despite having seen only 37% as much training data.

Consulting and advisory firm EY launched EY.AI, a set of technologies and human capabilities to enable its customers to adopt AI. The company claims EY.AI is the result of investments totaling $1.4 billion, including embedding AI into its proprietary EY Fabric technology platform, as well as some unspecified technology acquisitions. EY is rolling out its own LLM called EY.ai EYQ, which has been tested with 4,200 EY employees. Microsoft provided EY with early access to Azure OpenAI to enable EY to build generative AI apps, and it also cites partnerships with Dell Technologies Inc., International Business Machines Corp., SAP SE, ServiceNow Inc., Thomson Reuters Corp. and UiPath Inc.

Funding and M&A

Amazon Web Services is to invest up to $4 billion in generative AI foundation model provider Anthropic in the form of cloud credits and a minority stake, becoming its "primary cloud provider." The deal gives Anthropic access to AWS Trainium and Inferentia custom accelerator chips to develop its foundation models, the most recent of which is Claude 2. Anthropic will enable its Claude AI assistant to be customized and fine-tuned on AWS and made available via the Amazon Bedrock service. Anthropic staff will be involved in the development of future AWS Trainium and Inferentia technology, and Amazon's developers will be using Anthropic's models in their own development.

AI21 Labs has received $155 million in a series C funding round, bringing total funding to $283 million. The Israel-based startup has developed a family of foundation models, as well as specialized models focused on specific tasks, citing knowledge and support platforms, retail software, and content management systems as application areas.

Hugging Face raised a $235 million series D round at a valuation of $4.5 billion. The investors were led by new investor Salesforce Ventures and included other new investors Alphabet Inc., Advanced Micro Devices Inc., Amazon.com Inc., IBM, Intel Corp., NVIDIA, QUALCOMM Inc. and Sound Ventures. It brings Hugging Face's total raised to $395.2 million. Its previous round in April 2022 had a post-money valuation of $2.0 billion.

Writer announced series B funding of $100 million, led by ICONIQ Growth. Writer has its own family of LLMs known as Palmyra, with a focus on smaller, cost-effective models. The round was raised at a post-money valuation of more than $500 million, with Insight Venture Management and Aspect Ventures as returning investors. Other participants included new investors Balderton Capital (UK), WndrCo Holdings, Accenture PLC and The Vanguard Group.

Imbue, formerly known as Generally Intelligent, raised a $200 million series B round co-led by returning investor Astera Institute and joined by new investor NVIDIA. The Palo Alto, Calif.-based company plans to build LLMs that can "reason and code" and power "practical AI agents that can accomplish larger goals and safely work for us in the real world," according to a company blog post. The round brings the total raised to $220 million, at a post-money valuation of $1 billion.

AWS acquired Hercules Labs, which offers a developer-enablement product, Fig. Because Fig provides command-line auto-completion, the acquisition, made for an undisclosed amount, is perceived to align well with AWS' code generation efforts. AWS announced Amazon CodeWhisperer, a tool that provides code suggestions, in June. The preview supports Python, Java and JavaScript, and a number of IDEs.

Conversational AI specialist Aivo was acquired by TimeTrade Systems, better known as Engageware. Aivo had an integration with ChatGPT that it paired with its own technology, to support conversational banking, conversational commerce and telecommunications sales, and customer service requirements. Engageware suggests Aivo has 100 employees, 200 customers and 150 million users.

Politics and regulations

A closed-door "AI safety forum" took place in September in Washington, bringing together technology figures and US senators to discuss AI regulation. Sen. Chuck Schumer (D-NY) suggested that there was broad agreement that the government should have an oversight role for AI but little agreement on what regulation should be introduced.

The UK's Competition and Markets Authority (CMA) released its initial report into AI foundation models. The report outlines a number of competitive uncertainties, warning that the changing importance of proprietary data or improved performance of leading models could lead to consolidation. The report also outlines a set of guiding principles for foundation models, suggesting that developers and deployers should be held accountable for outputs. Among the more interesting aspects of the guidance were its support for open-source models to reduce barriers to market entry and its call to explore interoperability so that firms can work with multiple foundation models. Next steps are for the CMA to engage with consumer groups, leading foundation model developers, major deployers of foundation models, challengers and new entrants, academics and other experts, and fellow regulators in the UK and abroad. It promises an update on the thinking behind its principles by early 2024.

Cédric O, formerly secretary of state for the Digital Sector of France and prominent figure in Emmanuel Macron's party La République En Marche, is one of a number of notable politicians in the EU to have expressed concerns in the last few weeks around the AI Act. In particular, he raised fears that the legislation will hinder attempts to develop foundation models in Europe. These contributions come at an important time as the Council of the European Union and European Parliament have yet to agree to a common text, with both having a separate version of the act.

Salesforce released the findings of a survey of 4,000 people across the US, the UK, Australia and India that found, among other things, that 65% of generative AI users are millennials or Gen Z, and 72% are employed, while 68% of nonusers are Gen X or baby boomers. Salesforce says this points to a cohort of "super users," otherwise known as young, employed people.

This article was published by S&P Global Market Intelligence and not by S&P Global Ratings, which is a separately managed division of S&P Global.

451 Research is part of S&P Global Market Intelligence.