Research — March 10, 2025

Ellie Brown and Jean Atelsek

451 Research is a technology research group within S&P Global Market Intelligence. For more about the group, please refer to the 451 Research overview and contact page.

There are no "comps" in the generative AI universe. This is a new market, and cloud providers eager to justify their AI investments have little to go on when setting prices for their platforms and services. Stock keeping unit (SKU) changes by Amazon.com Inc.'s Amazon Web Services, Microsoft Corp.'s Azure and Alphabet Inc.'s Google Cloud logged by 451 Research's Cloud Price Index in 2024 (more than 2 million in total, spanning services added, services removed, price increases and price decreases) reveal that, for now at least, the big three hyperscalers are easing what they charge for generative AI.

Although we have heard plenty about record capital investments by AWS, Microsoft and Alphabet to power AI-driven services, less has been said about how prices are evolving in response to market demand and competitive pressure. Recent announcements, such as Microsoft adding a free tier for its GitHub Copilot coding assistant or Google dropping the $20-per-month add-on fee for AI features in its Workspace products, point to the general direction of hyperscalers' AI service rates: downward. Gone are the days when the big cloud providers publicized price cuts to tempt users onto their platforms; changes in AI/machine-learning service pricing have largely gone under the radar. Although the three parent companies apply AI technology across their portfolios (cloud and non-cloud), a look at pricing adjustments to their cloud platforms for building and training AI applications suggests that demand has been underwhelming.

AWS, Azure and Google Cloud made over 2 million SKU changes in 2024

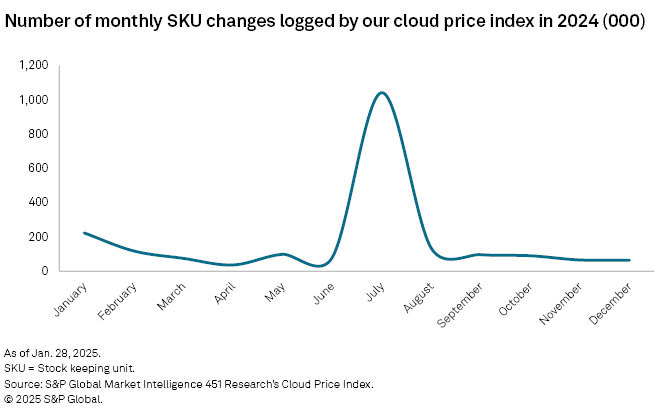

In addition to publishing quarterly cloud price benchmarks, 451 Research's Cloud Price Index tracks SKU changes — services added and removed, price increases and decreases — by the cloud hyperscalers on a monthly basis. Each month, the cloud providers' SKU-level service portfolios and prices are compared with those pulled the month before and then sorted into categories by service type and region to highlight trends taking place within the data.
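
The monthly comparison described above amounts to diffing two snapshots of a provider's price list. The sketch below is a minimal illustration under stated assumptions, not the Cloud Price Index's actual pipeline: it assumes each snapshot is a hypothetical dictionary mapping a SKU identifier to a unit price, classifies every difference into the four change types the index tracks, and then tallies changes by service type and region using a hypothetical `service_type/region/name` SKU format.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass(frozen=True)
class SkuChange:
    sku: str
    kind: str  # "added", "removed", "price_increase" or "price_decrease"
    old_price: Optional[float]
    new_price: Optional[float]

def diff_snapshots(previous: Dict[str, float], current: Dict[str, float]) -> List[SkuChange]:
    """Classify every difference between last month's and this month's SKU -> price snapshot."""
    changes: List[SkuChange] = []
    for sku in sorted(current.keys() - previous.keys()):
        changes.append(SkuChange(sku, "added", None, current[sku]))
    for sku in sorted(previous.keys() - current.keys()):
        changes.append(SkuChange(sku, "removed", previous[sku], None))
    for sku in sorted(previous.keys() & current.keys()):
        old, new = previous[sku], current[sku]
        if new > old:
            changes.append(SkuChange(sku, "price_increase", old, new))
        elif new < old:
            changes.append(SkuChange(sku, "price_decrease", old, new))
        # SKUs whose price did not move produce no change record.
    return changes

def count_by_category(changes: List[SkuChange]) -> Counter:
    """Tally changes by (service type, region, change kind).

    Assumes hypothetical SKU ids of the form "service_type/region/name".
    """
    tally: Counter = Counter()
    for change in changes:
        service_type, region, _name = change.sku.split("/", 2)
        tally[(service_type, region, change.kind)] += 1
    return tally
```

Sorting the set differences keeps the output order stable from month to month, which makes successive reports easier to compare.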

Total change levels across the hyperscalers usually hover at roughly 100,000 or fewer each month, although in July 2024 we tracked a massive upswing driven almost entirely by the rollout of a new pricing structure for Red Hat's RHEL-based Amazon Elastic Compute Cloud instances on AWS. In a typical month, SKU changes consist almost entirely of service additions, although service removals and price changes are also included in the total change count.

While the top-level view of cloud service change activity can offer insight into large portfolio shake-ups, such as the one in July, drilling into the data gives a closer look at specific service types and at how the hyperscalers are addressing distinct markets. Our Cloud Price Index tracks not only services under the compute umbrella (e.g., virtual machines of various types, purchase options and regions), but also services in categories such as database, storage, analytics, networking, security and AI.

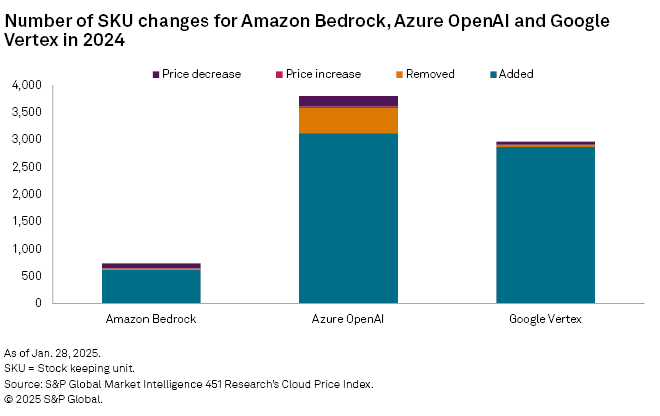

Amazon Bedrock, Azure OpenAI and Google Vertex: Thousands of SKUs added, but price cuts too

Make no mistake: The hyperscalers have released AI platform features at a good clip. In 2024 they added AI services to cloud regions in 27 countries, and service additions outnumbered price changes by roughly 20 to one. These figures are based on keyword searches across all of the monthly SKU changes logged by our Cloud Price Index for 2024. The method has limitations: for example, if a company adds a SKU one month and removes it the next, that counts as two changes even though they cancel each other out, and some SKU changes (including price changes) are made to correct errors. Still, it offers a decent proxy for where, geographically and by category, the providers are modifying their portfolios and pricing.
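
The keyword-based tally described above, including its main limitation, can be sketched as follows. The keyword list and the `(month, service_name, kind)` record format are hypothetical assumptions for illustration; the index's actual search terms are not given in this article.

```python
from collections import Counter
from typing import Iterable, Tuple

# Hypothetical search terms; substring matching is only a proxy and can miss or over-match SKUs.
AI_KEYWORDS = ("bedrock", "openai", "vertex", "gemini", "llama", "gpt")

def is_ai_change(service_name: str) -> bool:
    """True if the service name contains any of the (hypothetical) AI keywords."""
    name = service_name.lower()
    return any(keyword in name for keyword in AI_KEYWORDS)

def summarize_ai_changes(change_log: Iterable[Tuple[str, str, str]]) -> Tuple[Counter, float]:
    """Count AI-related changes by kind and return (counts, additions per price change).

    Every record counts once, so a SKU added one month and removed the next
    contributes two changes even though the two cancel out.
    """
    counts = Counter(kind for _month, service, kind in change_log if is_ai_change(service))
    price_changes = counts["price_increase"] + counts["price_decrease"]
    ratio = counts["added"] / price_changes if price_changes else float("inf")
    return counts, ratio
```

Feeding in a log where one Bedrock SKU is added in March and removed in April yields two counted changes for that SKU, which is exactly the double counting the paragraph above warns about.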

While the hyperscalers provide numerous services that fall under the generative AI umbrella, each also offers its own managed service for building, deploying or managing AI models. These services, Amazon Bedrock, Azure OpenAI and Google Vertex, all saw SKU additions last year — and each also saw notable price cuts over the course of the year.

Amazon Bedrock is a managed service that offers users access to multiple foundation models via a unified API, making it possible to evaluate and customize them on a pay-as-you-go basis. For text-based models, pricing depends on the number of input and output tokens, which represent the chunks of text going into and coming out of the model for a GenAI application.
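
Pay-as-you-go token pricing reduces to simple arithmetic: input tokens and output tokens, each multiplied by its own rate. The sketch below assumes hypothetical per-1,000-token rates in US dollars; actual Bedrock prices differ by model and region and are not reproduced here. `Decimal` is used to avoid floating-point rounding in currency amounts.

```python
from dataclasses import dataclass
from decimal import Decimal

@dataclass(frozen=True)
class TokenRates:
    """Hypothetical USD rates per 1,000 tokens (illustrative, not published prices)."""
    input_per_1k: Decimal
    output_per_1k: Decimal

def request_cost(rates: TokenRates, input_tokens: int, output_tokens: int) -> Decimal:
    """Cost of one request: input and output tokens are billed at separate rates."""
    thousand = Decimal(1000)
    return (Decimal(input_tokens) / thousand * rates.input_per_1k
            + Decimal(output_tokens) / thousand * rates.output_per_1k)

# Usage with made-up rates: 2,000 input tokens and 500 output tokens per request.
rates = TokenRates(input_per_1k=Decimal("0.0003"), output_per_1k=Decimal("0.0006"))
per_request = request_cost(rates, input_tokens=2000, output_tokens=500)
monthly = per_request * 100_000  # e.g., 100,000 such requests in a month
```

Because input and output are priced separately, a cut to the output rate matters most for applications that generate long responses, while a cut to the input rate matters most for prompt-heavy workloads.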

Usage rates for several of these models saw significant cuts in 2024. Input and output token rates for Llama3 fell by an average of 48% in four separate months (June, August, November and December); for Mistral Large, by an average of 48% in June and September; and for AWS' Titan Text G1 – Express and Lite models, by an average of 61% in May. In December, AWS announced an 80%-85% reduction in the cost of its Amazon Bedrock Guardrails service, which offers configurable safeguards for blocking harmful content and filtering out hallucinations, in all regions where the service is available.
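
Percentage cuts like those above are measured against the old price, and successive cuts compound rather than add. The helpers below are a minimal sketch using hypothetical prices; they are not a reproduction of how 451 Research averages its figures.

```python
from statistics import mean
from typing import Iterable, Tuple

def pct_reduction(old_price: float, new_price: float) -> float:
    """Percentage cut relative to the old price."""
    return (old_price - new_price) / old_price * 100

def average_reduction(price_pairs: Iterable[Tuple[float, float]]) -> float:
    """Unweighted mean of per-SKU percentage cuts, given (old, new) price pairs."""
    return mean(pct_reduction(old, new) for old, new in price_pairs)

def cumulative_reduction(step_reductions_pct: Iterable[float]) -> float:
    """Total cut after applying successive percentage cuts in sequence."""
    remaining = 1.0
    for pct in step_reductions_pct:
        remaining *= 1 - pct / 100
    return (1 - remaining) * 100

# Two successive 50% cuts leave a quarter of the original price: a 75% total cut, not 100%.
two_halvings = cumulative_reduction([50, 50])
```

An unweighted mean treats every SKU equally; weighting each cut by usage volume would better reflect what customers actually save, but that requires consumption data the price list alone does not provide.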

Azure OpenAI is Microsoft's cloud-based service for building and deploying AI models using technology jointly enabled by Microsoft and OpenAI, including GPT (primarily for text and audio generation) and DALL-E (for image generation). Last year, our Cloud Price Index picked up 187 rate cuts, averaging 39%, for input and output tokens, hosting units (the deployed capacity required to run a model) and fine-tuning. More than two-thirds of the price reductions were for Azure OpenAI's newer GPT-4 models (of which there are several variants).

In November, Microsoft launched Azure OpenAI Data Zones in the US and EU for customers that need to ensure that data processing happens within those geographies — in December, the company announced a new provisioned deployment type for Data Zones and reduced the price for tokens by 9%-50% depending on the model, region and type (cached or uncached). Worthy of note: Copilot, Microsoft's brand for its OpenAI-enabled developer, productivity and security tools, had no price reductions in the Azure OpenAI SKUs we collected for 2024.

Google Vertex is Google Cloud's platform for creating, deploying and managing AI models and applications. In May, the company reduced by two-thirds the cost of video embeddings for its self-developed Gemini 1.5 multimodal large language model. In August, it implemented 80%-plus rate cuts for text, audio, image and video input as well as text output for Gemini 1.5 Flash, the speediest and cheapest version of the model (Gemini 1.5 Pro is the other version). In October, the company followed with 67%-75% price reductions in those services for Gemini 1.5 Pro.

Like all foundation model vendors, AWS, Azure and Google Cloud put limits, or quotas, on various components required to run AI applications. These can apply to the number of tokens per minute, requests per minute, volume of resources deployed (such as file sizes and number of data sources), and other measures. The goal is to offer customers a fair shot at using the models, but also, given the detailed telemetry the providers use to monitor and charge for access to these compute-intensive services, to ensure that hosting AI models does not compromise profitability. In a sense, the hyperscalers are feeling their customers' pain as they struggle to create AI applications at price points that deliver a sustainable margin.
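
Quotas such as tokens per minute are commonly enforced with a rate limiter. The class below is a hypothetical sliding-window sketch, not any provider's actual enforcement logic: a request is admitted only if the tokens consumed over the trailing 60 seconds, plus the new request, stay within the limit. Timestamps are passed in explicitly so the behavior is deterministic.

```python
from collections import deque
from typing import Deque, Tuple

class TokensPerMinuteQuota:
    """Hypothetical sliding-window limiter for a tokens-per-minute quota."""

    def __init__(self, limit: int, window_s: float = 60.0) -> None:
        self.limit = limit
        self.window_s = window_s
        self._events: Deque[Tuple[float, int]] = deque()  # (timestamp, tokens) per admitted request
        self._used = 0  # tokens consumed inside the current window

    def try_consume(self, now: float, tokens: int) -> bool:
        """Admit the request if it fits under the quota; otherwise reject it."""
        # Drop requests that have aged out of the trailing window.
        while self._events and now - self._events[0][0] >= self.window_s:
            _, expired = self._events.popleft()
            self._used -= expired
        if self._used + tokens > self.limit:
            return False  # a real service would typically signal "retry later" here
        self._events.append((now, tokens))
        self._used += tokens
        return True
```

A requests-per-minute quota follows the same pattern, with each request counted as one unit instead of its token count.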

This article was published by S&P Global Market Intelligence and not by S&P Global Ratings, which is a separately managed division of S&P Global.
