24 Feb, 2025

By Iuri Struta

NVIDIA will report its earnings results on Feb. 26. Source: NVIDIA Corp.

As NVIDIA Corp. prepares for its highly anticipated earnings report, market participants are focused on any update on shipments of its latest server chip, Blackwell.

"The most fundamental question for NVIDIA now is Blackwell," said Melissa Otto, head of technology, media and telecom research at Visible Alpha, a part of S&P Global Market Intelligence. "There is a lot of uncertainty."

This uncertainty is reflected in Wall Street sales forecasts — analysts expect Blackwell sales for NVIDIA's fourth fiscal quarter to range between $6 billion and $19 billion, according to Visible Alpha.

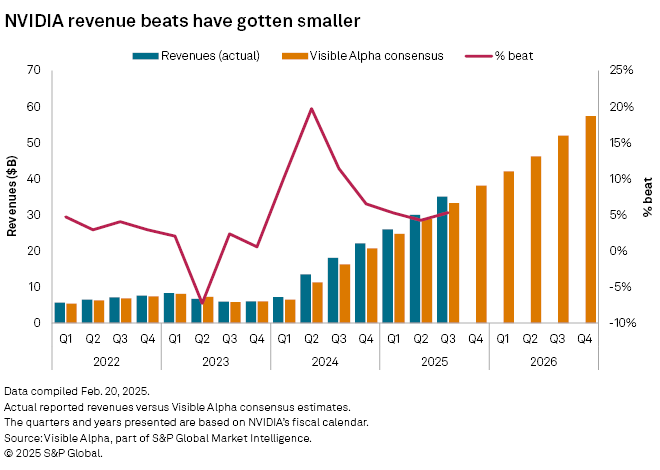

With demand for NVIDIA chips skyrocketing in recent years, analysts have had trouble accurately predicting revenue growth. In fiscal 2024, which corresponds to calendar 2023, NVIDIA beat consensus revenue estimates by double digits in three of the four quarters. As revenue growth slowed in fiscal 2025, the beats also became smaller.

Analyst opinions diverge significantly about NVIDIA earnings. The most pessimistic analyst projects NVIDIA revenue to start declining in fiscal 2027, while the most optimistic expects a tripling from fiscal 2025 levels, according to Visible Alpha.

DeepSeek scare

NVIDIA investors got a scare in early February after China-based DeepSeek launched a competitive large language model that it claimed cost only about $6 million to develop. Since then, multiple executives, including Microsoft Corp. CEO Satya Nadella, have said that cheaper models increase demand for computing power instead of reducing it. NVIDIA CEO Jensen Huang echoed that view, saying DeepSeek's innovations will lead to greater demand for AI hardware.

"We believe demand is far outstripping supply with Blackwell in the field and after speaking with many enterprise AI customers, we have seen not one AI enterprise deployment slow down or change due to the DeepSeek situation," Dan Ives, an analyst at Wedbush Securities, said in a recent note to clients. "No customer wants to 'lose their place in line' as it is described to us for NVIDIA's next-generation chips."

GenAI adoption high

Companies are seeking to adopt AI technologies but are also mindful of the challenges. Organizations cited cost, along with data privacy and security, as one of the biggest challenges preventing greater generative AI adoption, according to a survey of organizations by S&P Global Market Intelligence 451 Research.

"It's very expensive to build AI applications. Organizations are optimizing how much cloud computing they are using. The cost needs to come down," Visible Alpha's Otto said.

The 451 Research survey also found that organizations see AI as imperative, with 100% of companies either having implemented or planning to implement AI in the next 12 months.

Training vs. inference

Big Tech companies have increased their capital expenditure commitments for 2025, with Microsoft Corp., Meta Platforms Inc. and Alphabet Inc. planning a combined capex of $220 billion this year. A good chunk of that will go to NVIDIA server chips, although these companies are now also looking to develop chips in-house based on application-specific integrated circuit designs.

One major question for NVIDIA investors is how a market shift toward inference use cases will impact demand for Blackwell chips. Inference is the stage in which trained AI models are fed inputs such as prompts to generate new output. Inference comes after AI training, which is a more computationally intensive process.

Some observers expect hyperscalers to continue relying on NVIDIA chips for training while broadening the range of chips used for inference, including chips of their own design. Blackwell, however, is geared toward inferencing applications as NVIDIA seeks to capture this high-growth market. It is reported to be four times faster at training and 30 times faster at inference than the previous Hopper generation.

"NVIDIA is talking up the increase in performance for inference from Blackwell, but I think training is still its sweet spot," said John Abbott, a semiconductor analyst at 451 Research. "For very high performance, where other factors matter less, Blackwell would be an option, but most inference tasks don't need such a high-powered, expensive and currently hard-to-get-hold-of chip."