Research — Feb 27, 2025

By Kelly Morgan, Johan Vermij, and Dan Thompson

Hangzhou DeepSeek Artificial Intelligence Co. Ltd. is a Chinese AI lab that has been developing code-generation and large language models since mid-2023. The firm released its V3 large language model in December 2024, but it was R1, a reasoning model launched in January 2025, that caught the attention of developers and investors alike. The model is open source and performs well against similar models, yet it was reportedly trained using only 2,048 NVIDIA H800 GPU chips (compared with OpenAI LLC's GPT-4, which was trained in 2022 using an estimated 20,000 A100 GPUs), and therefore at much lower cost than other foundation models. Using fewer, lower-powered GPU chips means less energy may be required. That calls into question whether many of the datacenters planned with the previous models in mind will be needed, and it could in turn reduce the energy demand placed on utilities.

Since the launch of ChatGPT, the amount of infrastructure required for AI over the long term has been anyone's guess. The largest IT and AI firms, particularly in the US, have seemed to lean toward overbuilding rather than being caught without capacity, making investors nervous about the returns on these AI investments. This explains, in part, why investors responded to the DeepSeek model as if a bubble were bursting. That may prove true, and there are still major unknowns about AI's use in society, as well as the resources it will require. However, there are always highs and lows as a new technology takes off. Many of the trends behind the growing demand for datacenters are still in place, and although there is always the potential for overbuilding, facilities are now constructed much faster than during previous eras of overbuilding. The industry is more flexible now: it can respond to overcapacity fairly quickly by halting construction, although top builders may lose some money. The same cannot be said for large-scale power plants, with their much longer time horizons.

Added datacenter capacity — all for AI?

Since the launch of ChatGPT in November 2022, planned datacenter builds have soared, with builders requesting power feeds from utilities throughout the US at a level not seen before. This was starting to happen outside the US as well. Does the launch of DeepSeek's model mean that datacenters will sit empty? That could depend on how much of the projected datacenter additions was expected to be for GPUs. In 451 Research's GPU Impact on Datacenters Market Monitor & Forecast, we estimate that datacenters plan to add 15-18 GW per year globally from 2025 to 2029, with 30%-40% of that capacity expected to be built to house GPU chips (of various types) for AI workloads. The remainder is estimated to be for other types of equipment: CPU chips used for AI, storage, networking and IT equipment used for non-AI workloads (e.g., typical cloud deployments). Not all of these GPU chips were expected to be used for developing large language models. AI providers (and analysts) have been expecting a shift away from training only very large language models and toward training and maintaining a few of those alongside a growing number of smaller specialized models, while inferencing becomes a larger percentage of AI workloads. If DeepSeek's open-source model requires fewer high-powered chips and potentially less capital to train, it may accelerate the move toward smaller, more distributed models.
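To make the forecast figures above concrete, the following is a minimal sketch of the arithmetic, not part of the 451 Research model. It assumes the low end of the capacity range pairs with the low end of the GPU share (and high with high), and that additions stay constant across all five years; both are simplifying assumptions, and the function and variable names are purely illustrative.

```python
# Figures quoted in the paragraph above (451 Research estimate).
ANNUAL_ADDITIONS_GW = (15.0, 18.0)  # global datacenter capacity added per year
GPU_SHARE = (0.30, 0.40)            # share of that capacity built to house GPUs
YEARS = range(2025, 2030)           # 2025 through 2029 inclusive


def gpu_capacity_range(additions_gw, gpu_share):
    """Return (low, high) GW per year expected to house GPU equipment.

    Assumption: low pairs with low and high with high, which gives the
    widest plausible band rather than a central estimate.
    """
    low = additions_gw[0] * gpu_share[0]
    high = additions_gw[1] * gpu_share[1]
    return low, high


gpu_low, gpu_high = gpu_capacity_range(ANNUAL_ADDITIONS_GW, GPU_SHARE)
n_years = len(YEARS)

# Cumulative totals assume the same annual additions in every year.
total_low = ANNUAL_ADDITIONS_GW[0] * n_years
total_high = ANNUAL_ADDITIONS_GW[1] * n_years

print(f"GPU capacity per year: {gpu_low:.1f}-{gpu_high:.1f} GW")
print(f"All capacity {YEARS[0]}-{YEARS[-1]}: {total_low:.0f}-{total_high:.0f} GW")
print(f"GPU capacity {YEARS[0]}-{YEARS[-1]}: "
      f"{gpu_low * n_years:.1f}-{gpu_high * n_years:.1f} GW")
```

Under these assumptions, roughly 4.5-7.2 GW per year would be GPU-oriented, which leaves at least 60% of planned additions tied to workloads that a more efficient training method does not directly affect.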

Distributed, smaller AI models may mean that less datacenter capacity will be needed in very large-scale centralized locations, and that perhaps the current top AI companies will not need as much capacity as they think. The top AI and IT firms already seem to be trying to err on the side of overbuilding rather than being caught without capacity (and losing out to competitors as a result) in a "spend what it takes in order to grow" approach. One of the potential constraints on capacity looked like it would be the availability of electricity, with the potential knock-on effect of power companies overbuilding as well.

Are companies overbuilding?

A central critique in the debate over whether the tech giants are overbuilding (or already have) concerns future efficiency gains, in hardware, software or both, which could reduce the need for so much infrastructure. DeepSeek could be an example of such a gain. And because the company is offering its model as open source, it also feeds another common critique of AI initiatives: their long-term financial viability.

Since the advent of the computer, there have always been technological bottlenecks, whether in processing power, memory speed or capacity, storage speed or capacity, or elsewhere, in addition to limits on software's ability to properly harness hardware advancements. Each time a bottleneck was removed, advancements followed and new bottlenecks appeared. For general-purpose computing, it is true that systems all over the world sit underutilized, which one could argue is a sign they have been overbuilt. Companies continue to build out new infrastructure, which may ultimately be underutilized as well. However, current system utilization is not a good indicator of future demand.

In the world of GPU-based computing, we are still at the beginning of that curve. Companies continue to find new uses for AI, while the training of models to obtain results continues to take long periods of time to complete — weeks, or months in some cases. There seem to be endless queues of jobs to be run. It is true that there is still plenty of opportunity for optimization, but there is currently no shortage of new ideas and use cases. At this stage, it seems far more likely that efficiency gains, such as those from DeepSeek, will simply be a catalyst for leaps forward, rather than the buzzer at the end of the game. So, while DeepSeek claims to have created a way to do a lot with less, US IT firms will continue the race as well, building on those advancements, but with remarkably more compute power.

In addition, the large public-cloud providers have a bit of a backup plan. If hundreds or thousands of smaller models are operating and inferencing is growing, the public cloud model of shared infrastructure could be an appealing option for smaller companies running AI. And if the AI use case does not require inferencing to happen with very low latency, that infrastructure could still be in centralized datacenters. There will still be benefits to having equipment in larger, more interconnected facilities that have economies of scale and the ability to obtain green energy plus other sustainability benefits.

So, there could still be plenty of demand for large-scale datacenters; they may just take longer to grow into the campus size originally planned, and there could be concurrent growth of smaller, more distributed facilities. A lot will depend on the use cases for AI and on how much AI inferencing will require near-instantaneous response, and therefore smaller amounts of capacity distributed in, say, urban centers rather than centralized in highly efficient green datacenters.

Impact on energy companies

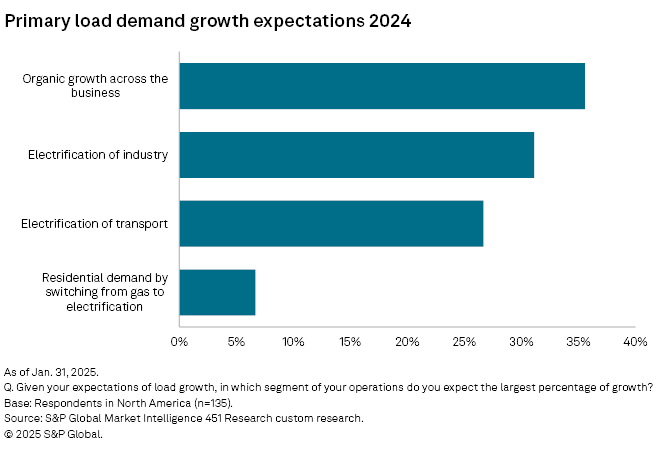

The rapid expansion of AI applications and the resulting surge in datacenter energy consumption clash with the transition to an all-electric society. Utilities already face the monumental challenge of doubling power capacity — without even accounting for the added strain from datacenters. In North America, most utilities anticipate load growth from organic demand, with industrial and transportation electrification further intensifying energy needs.

From this perspective, DeepSeek's metrics are promising. Training a model of comparable performance with roughly 10% of the GPUs, which also have a lower energy demand than the GPUs used by OpenAI, could drive AI's net energy demand down by as much as 95%. However, greater efficiency and lower inferencing cost will likely accelerate adoption across industries, and open-source models such as DeepSeek or International Business Machines Corp. and Red Hat's Granite will likely shift workloads from cloud to edge. Rather than being concentrated in a centralized AI cluster with dedicated demand, energy demand will become as decentralized as the workloads.
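The "10% of the GPUs" and "as much as 95%" figures can be related with a short back-of-the-envelope calculation. The sketch below is illustrative only: it assumes training energy scales as GPU count multiplied by energy per GPU (power draw times training time), and it ignores cooling, networking and inference entirely. The per-GPU energy ratio is not given in the article, so the code solves for the value that the 95% figure would imply rather than asserting one.

```python
# GPU counts as reported in the article.
DEEPSEEK_GPUS = 2_048   # NVIDIA H800 chips reportedly used by DeepSeek
GPT4_GPUS = 20_000      # estimated A100 chips used for GPT-4

# Upper-bound reduction in energy demand quoted in the article.
TARGET_REDUCTION = 0.95


def relative_energy(gpu_ratio, per_gpu_energy_ratio):
    """Training energy relative to the baseline.

    Assumption (not from the article): energy = GPU count x energy per GPU.
    """
    return gpu_ratio * per_gpu_energy_ratio


gpu_ratio = DEEPSEEK_GPUS / GPT4_GPUS

# Reduction if each GPU consumed the same energy as a baseline GPU.
reduction_equal_gpus = 1 - relative_energy(gpu_ratio, 1.0)

# Per-GPU energy ratio that would be needed to reach the 95% figure.
required_per_gpu_ratio = (1 - TARGET_REDUCTION) / gpu_ratio

print(f"GPU count ratio: {gpu_ratio:.1%}")
print(f"Reduction with equal per-GPU energy: {reduction_equal_gpus:.1%}")
print(f"Per-GPU energy ratio implied by a 95% cut: {required_per_gpu_ratio:.2f}")
```

Under this simplified model, the GPU count alone accounts for a reduction of about 90%, and reaching 95% would require each GPU to consume roughly half the energy of a baseline GPU over the training run.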

With the explosive growth of AI, Big Tech, the datacenter industry and utilities have all been challenged to supply enough power to meet the increasing demand. Given the intermittency of distributed energy resources, such as wind and solar, and their impact on grid stability, ambitious plans have been launched to revive nuclear plants or commission new small modular reactors to provide enough power and inertia to the grid, backed by the deep pockets of Big Tech. The democratization and decentralization of AI radically changes this business case. However, the argument that Big Tech would pay for the energy transition is a fallacy. While nuclear power may be cleaner than gas- or coal-fired thermal power, the most sustainable option is still to drive down overall power demand.

Many enterprises may hesitate to deploy DeepSeek due to IP, privacy or geopolitical concerns, but large industrial players, often committed to net-zero strategies, will likely lead the adoption of similar decentralized AI clusters. By integrating AI's energy needs into their emission reduction plans, these companies can enhance their energy resilience through smart spaces and microgrid solutions, balancing consumption with distributed energy and battery storage. This shift transfers responsibility for power generation not from Big Tech to utilities, but rather to the end user.

This article was published by S&P Global Market Intelligence and not by S&P Global Ratings, which is a separately managed division of S&P Global.

451 Research is a technology research group within S&P Global Market Intelligence. For more about the group, please refer to the 451 Research overview and contact page.
